Multimodal Agent-Signage Detection for Wayfinding

Solving Unity Environment with Deep Reinforcement Learning

Deep reinforcement learning (DRL) is a subfield of machine learning that combines deep learning with reinforcement learning. It has been used to solve complex tasks in domains including robotics, finance, and gaming. In recent years, DRL has become a popular approach to building game agents that learn from experience and adapt to new situations.

Objective

The first objective of this project is to train an agent using Deep Q Learning. The agent is trained to follow visual signs, while avoiding other agents in the environment, in order to reach a desired goal position. The setup is inspired by Unity’s Hallway environment.

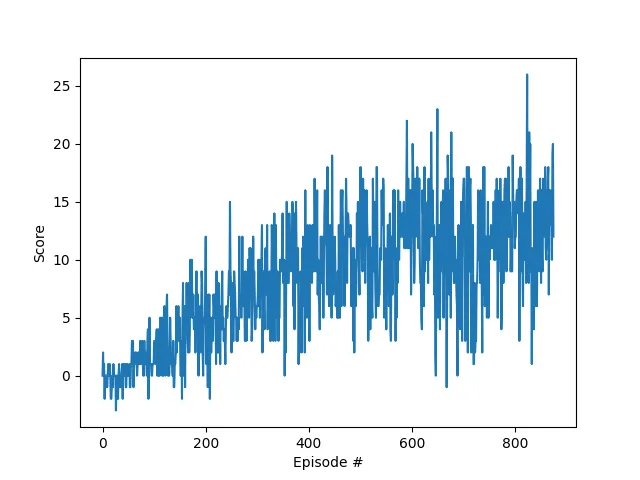

The agent was trained using a Deep Q Learning algorithm and was able to solve the environment in fewer than 1000 episodes.

As a next step, aimed at tackling a different problem, we made the environment itself a participating agent. Using the feedback received from the agent navigating the environment, together with basic architectural rules and procedural environment generation techniques, we tried to optimize the layout of the environment for:

- Efficient usage of space

- Point-to-Point Navigation and Evacuation times

- Efficient usage of construction materials

- Lighting and Visibility etc.

Environment & Task

The environment consists of a square world layout with corridors and pathways leading to four randomly placed goal positions. The pathways are lined with signage indicating the direction to each goal. The agent's objective is to reach the goal position in the minimum number of time steps without colliding with other agents in the environment. The agent has 4 possible actions: move forward, move backward, turn left and turn right.

The state space has 37 dimensions and contains the agent’s velocity, along with ray-based perception of objects around the agent’s forward direction. A reward of +1 is provided for reaching the goal, and a reward of -1 is provided for colliding with another agent and/or rigid objects in the environment. In addition, there’s a constant time penalty and other experimental rewards/punishments based on evolving heuristics.
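The reward structure described above can be sketched as a small function. This is only an illustration: the function name and the value of the time penalty are assumptions (the text says only that the penalty is constant), and the experimental heuristic rewards are left out.

```python
GOAL_REWARD = 1.0        # from the text: +1 for reaching the goal
COLLISION_REWARD = -1.0  # from the text: -1 for hitting an agent or a rigid object
TIME_PENALTY = -0.001    # assumed value; the text only says the penalty is constant


def step_reward(reached_goal: bool, collided: bool) -> float:
    """Return the reward for one time step (hypothetical helper)."""
    reward = TIME_PENALTY  # applied on every step to encourage short paths
    if reached_goal:
        reward += GOAL_REWARD
    if collided:
        reward += COLLISION_REWARD
    return reward
```

With a per-step penalty like this, a long but successful episode scores lower than a short one, which is what pushes the agent toward reaching the goal in few steps.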

The task is episodic, and in order to solve the environment, the agent must get an average score of +0.9 over 100 consecutive episodes.
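One way to check this criterion during training is to keep a rolling window of the most recent episode scores. The sketch below assumes a hypothetical `SolvedTracker` helper; only the threshold (+0.9) and the window length (100) come from the text.

```python
from collections import deque

SOLVED_SCORE = 0.9  # from the text
WINDOW = 100        # from the text


class SolvedTracker:
    """Tracks episode scores and reports when the rolling average meets the threshold."""

    def __init__(self, window: int = WINDOW, threshold: float = SOLVED_SCORE):
        self.scores = deque(maxlen=window)  # keeps only the last `window` scores
        self.threshold = threshold

    def add(self, episode_score: float) -> bool:
        """Record a score; return True once a full window averages at least the threshold."""
        self.scores.append(episode_score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and sum(self.scores) / len(self.scores) >= self.threshold
```

Requiring a full window prevents a few lucky early episodes from being counted as a solution.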

The Agent

To solve the problem posed by the environment, we used a Deep Q Learning algorithm. The algorithm is based on the paper "Human-level control through deep reinforcement learning" by DeepMind.

The algorithm works by using a neural network to approximate the Q-Function. The neural network receives the state as input and outputs the Q-Value for each action. The agent then uses these Q-Values to select the best action. The network is trained with the Q-Learning update rule. A naive implementation of the algorithm suffers from two problems: correlated experiences and correlated targets. The algorithm uses two techniques to solve them: Experience Replay and Fixed Q-Targets.

Correlated experiences

Correlated experiences refer to a situation where the experiences (or transitions) of an agent are correlated with each other, meaning they are not independent and identically distributed. Consecutive transitions from the same episode are a typical example. Training on them in order biases the updates toward the most recent, similar states, which can make learning unstable and result in poor performance or convergence to suboptimal policies.

To solve this problem, we use a technique called Experience Replay. The technique consists in storing the experiences of the agent in a replay buffer and sampling randomly from it to train the neural network.
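A replay buffer of this kind can be sketched with the standard library alone. The class and field names below are assumptions for illustration, and the buffer capacity and batch size are not given in the text.

```python
import random
from collections import deque, namedtuple

# One stored transition; the field names are illustrative.
Experience = namedtuple("Experience", ["state", "action", "reward", "next_state", "done"])


class ReplayBuffer:
    """Fixed-size buffer that stores transitions and samples them uniformly at random."""

    def __init__(self, capacity: int, seed: int = 0):
        self.memory = deque(maxlen=capacity)  # oldest experiences are dropped first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done) -> None:
        self.memory.append(Experience(state, action, reward, next_state, done))

    def sample(self, batch_size: int):
        # Uniform sampling without replacement breaks the temporal ordering of transitions.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self) -> int:
        return len(self.memory)
```

In a training loop, learning would typically start only once `len(buffer)` is at least the batch size.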

Correlated targets

Correlated targets refer to a situation where the target values depend on the same network parameters that are being updated, so every update also shifts the targets. This moving-target effect correlates the learning signal with the current estimates and can slow down or prevent convergence to the optimal policy.

To solve this problem, we use a technique called Fixed Q-Targets. The technique consists of using two neural networks: the local network and the target network. The local network is used to select the best action to be taken by the agent while the target network is used to calculate the target value for the Q-Learning algorithm. The target network is updated every 4 steps with the weights of the local network.
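The two roles of the target network can be sketched in PyTorch as follows. The function names and the discount factor `GAMMA` are assumptions (the text does not state a discount factor). The update interval of 4 steps and the direct weight copy follow the description above.

```python
import torch
import torch.nn as nn

GAMMA = 0.99      # assumed discount factor; not stated in the text
UPDATE_EVERY = 4  # from the text: target network refreshed every 4 steps


def td_targets(target_net, rewards, next_states, dones, gamma=GAMMA):
    """Compute r + gamma * max_a' Q_target(s', a'), with no bootstrap term at episode end."""
    with torch.no_grad():  # targets are treated as constants during the update
        max_next_q = target_net(next_states).max(dim=1, keepdim=True).values
    return rewards + gamma * max_next_q * (1.0 - dones)


def maybe_sync(local_net, target_net, step, every=UPDATE_EVERY):
    """Copy the local weights into the target network every `every` steps."""
    if step % every == 0:
        target_net.load_state_dict(local_net.state_dict())
        return True
    return False
```

Here `rewards` and `dones` are expected as float tensors of shape `(batch, 1)`, so that the result lines up with the Q-values gathered from the local network for the actions actually taken.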

Neural network architecture

The neural network architecture used in the algorithm is a simple fully connected neural network with 2 hidden layers. The input layer has 37 neurons, the output layer has 4 neurons and the hidden layers have 64 neurons each. The activation function used in the hidden layers is ReLU and the activation function used in the output layer is the identity function.

The optimizer used for this implementation is Adam with a learning rate of 0.0005.

The library used to implement the neural network was PyTorch.
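The architecture and optimizer described above could be written in PyTorch roughly as follows. The layer sizes, activations and learning rate come from the text. The class and variable names are assumptions.

```python
import torch
import torch.nn as nn
import torch.optim as optim


class QNetwork(nn.Module):
    """Fully connected network mapping a 37-dimensional state to 4 Q-values."""

    def __init__(self, state_size: int = 37, action_size: int = 4, hidden_size: int = 64):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(state_size, hidden_size),
            nn.ReLU(),
            nn.Linear(hidden_size, hidden_size),
            nn.ReLU(),
            nn.Linear(hidden_size, action_size),  # identity output: raw Q-values
        )

    def forward(self, state: torch.Tensor) -> torch.Tensor:
        return self.layers(state)


q_network = QNetwork()
optimizer = optim.Adam(q_network.parameters(), lr=5e-4)  # learning rate 0.0005
```

The output layer has no activation because Q-values are unbounded estimates of return, not probabilities.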

Training Task

To train the agent we used a loop to interact with the environment, collect and learn from the experiences. The plot below shows the progress of the agent in obtaining higher rewards.

Here we can see the rewards increase as the agent improves. The tradeoff between exploration and exploitation is also visible in the plot: the agent explores more during the first 200 episodes, then starts to exploit what it has learned and obtains higher rewards.
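A common way to obtain this shift from exploration to exploitation is an epsilon-greedy policy with a decaying epsilon, as in the DeepMind paper cited earlier. The text does not state which exploration scheme or schedule was used here, so the sketch below, including all three constants and both function names, is an assumption.

```python
import random

# Assumed schedule; the text does not give these values.
EPS_START, EPS_END, EPS_DECAY = 1.0, 0.01, 0.995


def select_action(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, otherwise the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=q_values.__getitem__)  # exploit


def epsilon_at(episode: int) -> float:
    """Exponentially decayed epsilon, clipped at EPS_END."""
    return max(EPS_END, EPS_START * EPS_DECAY ** episode)
```

With a schedule like this, early episodes are dominated by random actions, and later episodes mostly follow the learned Q-values.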

Procedural Environment Generation

Next, we used the Wave Function Collapse algorithm to procedurally generate the environment from a set of primitive building blocks. As discussed, the motivation is to eventually use the agent's feedback from the wayfinding example above as an input during environment generation.
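To illustrate the idea, here is a heavily simplified sketch of Wave Function Collapse on a grid. The tile names and the adjacency rule are hypothetical and are not the project's actual building blocks. Full implementations usually use direction-specific rules and weighted entropy, both of which are omitted here.

```python
import random

TILES = ("wall", "corridor", "room")  # hypothetical building blocks
# Hypothetical rule: which tiles may sit next to each other (same in every direction).
ALLOWED = {
    "wall": {"wall", "corridor"},
    "corridor": {"wall", "corridor", "room"},
    "room": {"corridor", "room"},
}


def neighbors(r, c, rows, cols):
    """Yield the in-bounds 4-connected neighbors of cell (r, c)."""
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols:
            yield nr, nc


def propagate(grid, start, rows, cols):
    """Remove options from neighboring cells that no longer fit, rippling outward."""
    stack = [start]
    while stack:
        r, c = stack.pop()
        # Tiles that at least one remaining option of (r, c) permits next to it.
        supported = set().union(*(ALLOWED[t] for t in grid[r][c]))
        for nr, nc in neighbors(r, c, rows, cols):
            reduced = grid[nr][nc] & supported
            if not reduced:
                raise RuntimeError("contradiction: no tile fits this cell")
            if reduced != grid[nr][nc]:
                grid[nr][nc] = reduced
                stack.append((nr, nc))


def wave_function_collapse(rows, cols, seed=0):
    """Return a rows x cols layout of tile names that satisfies ALLOWED."""
    rng = random.Random(seed)
    grid = [[set(TILES) for _ in range(cols)] for _ in range(rows)]
    while True:
        open_cells = [(len(grid[r][c]), r, c)
                      for r in range(rows) for c in range(cols)
                      if len(grid[r][c]) > 1]
        if not open_cells:
            break
        _, r, c = min(open_cells)  # cell with the fewest remaining options
        grid[r][c] = {rng.choice(sorted(grid[r][c]))}  # collapse to a single tile
        propagate(grid, (r, c), rows, cols)
    return [[next(iter(cell)) for cell in row] for row in grid]
```

Calling `wave_function_collapse(5, 5, seed=1)` returns a 5 x 5 layout in which no wall directly borders a room. Feedback from the navigating agent could in principle be used to bias which tile is chosen at the collapse step, though how the project does this is not described here.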